Problem

Logseq does not have a native way to view the character count of your notes (aka pages)

Logseq’s performance is a balance between the number of pages and the size of each page

Logseq’s graph is difficult to search and prune manually

Possible solution

Encode Logseq’s graph to enable semantic search, then leverage this encoding to develop an automatic pruning tool of some kind.

Implementation

If I want to enable semantic search, then I must embed my graph

If I don’t know the size of my graph or its pages, then there exists a chance that embedding my graph could be very costly

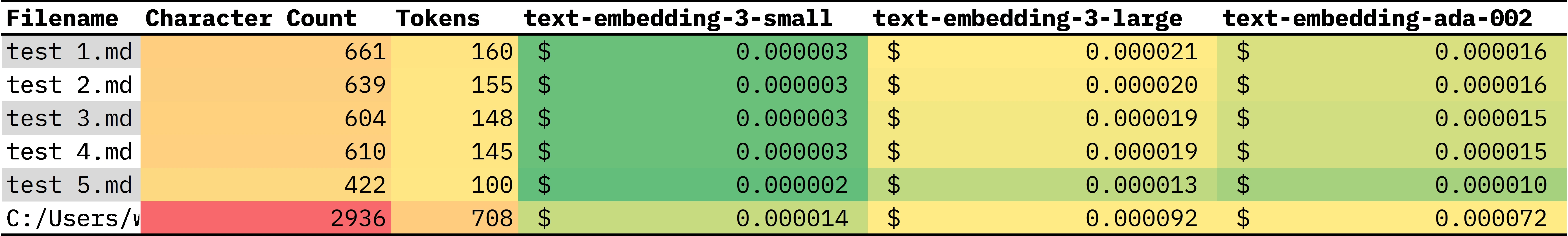

If I want to tokenize all the pages in my graph, then I need to iterate through the text of each page

If I have to do that anyway, then I may as well count the characters along the way

If I do both, then I also increment a total counter to get a total count of characters and tokens for my graph
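The counting loop described above can be sketched roughly as follows. This is a minimal sketch, not the actual script: it assumes the graph's pages are Markdown files in a single folder (Logseq keeps them under `pages/` by default), and the names `count_graph` and `rough_token_count` are hypothetical. The default token counter is only a placeholder heuristic (about four characters per token); for real numbers you would pass in a proper tokenizer, for example a function built on the `tiktoken` library.

```python
from pathlib import Path
from typing import Callable


def rough_token_count(text: str) -> int:
    # Placeholder heuristic (an assumption, not a real tokenizer):
    # roughly 4 characters per token for English text.
    return len(text) // 4


def count_graph(pages_dir: str,
                count_tokens: Callable[[str], int] = rough_token_count):
    """Return per-page rows plus total character and token counts."""
    rows = []
    total_chars = 0
    total_tokens = 0
    # Iterate through the text of each page exactly once.
    for path in sorted(Path(pages_dir).glob("*.md")):
        text = path.read_text(encoding="utf-8")
        chars = len(text)
        tokens = count_tokens(text)
        rows.append({"page": path.stem, "characters": chars, "tokens": tokens})
        # Increment the running totals for the whole graph.
        total_chars += chars
        total_tokens += tokens
    return rows, total_chars, total_tokens
```

Passing the tokenizer in as a function keeps the loop independent of any one tokenizer, so the same pass over the pages yields both counts.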

If I have the token counts, I can estimate the cost of embedding for OpenAI’s text-embedding models
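Since embedding models are billed per token, the estimate is a single multiplication. The sketch below assumes a price quoted in US dollars per million tokens; the function name `estimate_embedding_cost` is hypothetical, and the price in the usage line is a made-up placeholder, so substitute the current rate for whichever model you plan to use.

```python
def estimate_embedding_cost(total_tokens: int,
                            usd_per_million_tokens: float) -> float:
    """Estimate the cost in USD of embedding `total_tokens` tokens."""
    # Price is an input, not a constant: model prices change over time.
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Usage with a placeholder price (not a real quote):
cost = estimate_embedding_cost(2_500_000, usd_per_million_tokens=0.10)
print(f"Estimated embedding cost: ${cost:.2f}")
```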

Outputting the data as a CSV file

If I output this data as a CSV, I can manipulate and format the data in a spreadsheet. For instance, I can apply conditional formatting to highlight the largest pages.
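A minimal sketch of the CSV export, using Python's standard `csv` module. The column names (`page`, `characters`, `tokens`), the function name `write_counts_csv`, and the output filename are assumptions chosen for illustration.

```python
import csv
import io


def write_counts_csv(rows, out) -> None:
    """Write one row per page to an open text file or buffer."""
    writer = csv.DictWriter(out, fieldnames=["page", "characters", "tokens"])
    writer.writeheader()
    writer.writerows(rows)


# Usage: write to a file that a spreadsheet app can open.
example_rows = [{"page": "Book highlights", "characters": 48210, "tokens": 12052}]
with open("logseq_counts.csv", "w", newline="", encoding="utf-8") as f:
    write_counts_csv(example_rows, f)
```

Opening the file with `newline=""` lets the `csv` module control line endings, which avoids blank rows on Windows.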

Summary

This is my answer to efficiently managing large texts within the note-taking tool Logseq, particularly when dealing with extensive book highlights and other sizable content sources, such as automatic imports of highlights from long articles via read-later apps like Omnivore.